| Service | Microsoft Docs article | Related commit history on GitHub | Change details |

|---|---|---|---|

| active-directory | On Premises Scim Provisioning | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/app-provisioning/on-premises-scim-provisioning.md | The Azure Active Directory (Azure AD) provisioning service supports a [SCIM 2.0] - Administrator role for installing the agent. This task is a one-time effort and should be an Azure account that's either a hybrid administrator or a global administrator. - Administrator role for configuring the application in the cloud (application administrator, cloud application administrator, global administrator, or a custom role with permissions). -## On-premises app provisioning to SCIM-enabled apps -To provision users to SCIM-enabled apps: -- 1. [Download](https://aka.ms/OnPremProvisioningAgent) the provisioning agent and copy it onto the virtual machine or server that your SCIM endpoint is hosted on. - 1. Open the provisioning agent installer, agree to the terms of service, and select **Install**. - 1. Open the provisioning agent wizard, and select **On-premises provisioning** when prompted for the extension you want to enable. - 1. Provide credentials for an Azure AD administrator when you're prompted to authorize. Hybrid administrator or global administrator is required. - 1. Select **Confirm** to confirm the installation was successful. - 1. Navigate to the Azure Portal and add the **On-premises SCIM app** from the [gallery](../../active-directory/manage-apps/add-application-portal.md). - 1. Select **On-Premises Connectivity**, and download the provisioning agent. 1. Go back to your application, and select **On-Premises Connectivity**. - 1. Select the agent that you installed from the dropdown list, and select **Assign Agent(s)**. - 1. Wait 20 minutes prior to completing the next step, to provide time for the agent assignment to complete. - 1. Provide the URL for your SCIM endpoint in the **Tenant URL** box. An example is https://localhost:8585/scim. -  - 1. 
Select **Test Connection**, and save the credentials. Use the steps [here](on-premises-ecma-troubleshoot.md#troubleshoot-test-connection-issues) if you run into connectivity issues. - 1. Configure any [attribute mappings](customize-application-attributes.md) or [scoping](define-conditional-rules-for-provisioning-user-accounts.md) rules required for your application. - 1. Add users to scope by [assigning users and groups](../../active-directory/manage-apps/add-application-portal-assign-users.md) to the application. - 1. Test provisioning a few users [on demand](provision-on-demand.md). - 1. Add more users into scope by assigning them to your application. - 1. Go to the **Provisioning** pane, and select **Start provisioning**. - 1. Monitor using the [provisioning logs](../../active-directory/reports-monitoring/concept-provisioning-logs.md). +## Deploying Azure AD provisioning agent +The Azure AD Provisioning agent can be deployed on the same server hosting a SCIM-enabled application, or on a separate server, provided it has line of sight to the application's SCIM endpoint. A single agent also supports provisioning to multiple applications hosted locally on the same server or on separate hosts, again as long as each SCIM endpoint is reachable by the agent. ++ 1. [Download](https://aka.ms/OnPremProvisioningAgent) the provisioning agent and copy it onto the virtual machine or server that your SCIM application endpoint is hosted on. + 2. Run the provisioning agent installer, agree to the terms of service, and select **Install**. + 3. Once installed, locate and launch the **AAD Connect Provisioning Agent wizard**, and when prompted for an extension, select **On-premises provisioning**. + 4. For the agent to register itself with your tenant, provide credentials for an Azure AD admin with Hybrid administrator or global administrator permissions. + 5. Select **Confirm** to confirm the installation was successful. 
+ +## Provisioning to SCIM-enabled application +Once the agent is installed, no further configuration is necessary on-premises, and all provisioning configurations are then managed from the portal. Repeat the following steps for every on-premises application being provisioned via SCIM. + + 1. In the Azure portal, navigate to Enterprise applications and add the **On-premises SCIM app** from the [gallery](../../active-directory/manage-apps/add-application-portal.md). + 2. From the left-hand menu, navigate to the **Provisioning** option and select **Get started**. + 3. Select **Automatic** from the dropdown list and expand the **On-Premises Connectivity** option. + 4. Select the agent that you installed from the dropdown list and select **Assign Agent(s)**. + 5. Now either wait 10 minutes or restart the **Microsoft Azure AD Connect Provisioning Agent** before proceeding to the next step and testing the connection. + 6. In the **Tenant URL** field, provide the SCIM endpoint URL for your application. The URL is typically unique to each target application and must be resolvable by DNS. An example for a scenario where the agent is installed on the same host as the application is https://localhost:8585/scim. + 7. Select **Test Connection**, and save the credentials. The application SCIM endpoint must be actively listening for inbound provisioning requests; otherwise, the test will fail. Use the steps [here](on-premises-ecma-troubleshoot.md#troubleshoot-test-connection-issues) if you run into connectivity issues. + 8. Configure any [attribute mappings](customize-application-attributes.md) or [scoping](define-conditional-rules-for-provisioning-user-accounts.md) rules required for your application. + 9. Add users to scope by [assigning users and groups](../../active-directory/manage-apps/add-application-portal-assign-users.md) to the application. + 10. Test provisioning a few users [on demand](provision-on-demand.md). + 11. 
Add more users into scope by assigning them to your application. + 12. Go to the **Provisioning** pane, and select **Start provisioning**. + 13. Monitor using the [provisioning logs](../../active-directory/reports-monitoring/concept-provisioning-logs.md). ## Additional requirements * Ensure your [SCIM](https://techcommunity.microsoft.com/t5/identity-standards-blog/provisioning-with-scim-getting-started/ba-p/880010) implementation meets the [Azure AD SCIM requirements](use-scim-to-provision-users-and-groups.md). |

| active-directory | Access Tokens | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/develop/access-tokens.md | Don't use mutable, human-readable identifiers like `email` or `upn` for uniquely #### Validate application sign-in -Use the `scp` claim to validate that the user has granted the calling application permission to call the API. Ensure the calling client is allowed to call the API using the `appid` claim. +* Use the `scp` claim to validate that the user has granted the calling app permission to call your API. +* Ensure the calling client is allowed to call your API using the `appid` claim (for v1.0 tokens) or the `azp` claim (for v2.0 tokens). + * You only need to validate these claims (`appid`, `azp`) if you want to restrict your web API to be called only by pre-determined applications (e.g., line-of-business applications or web APIs called by well-known frontends). APIs intended to allow access from any calling application do not need to validate these claims. ## User and application tokens |
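
The claim checks described in the Access Tokens change can be sketched roughly as follows. This is a minimal illustration, not the documented implementation: it assumes the token's signature, issuer, and audience have already been validated by a JWT library and that the decoded claims are available as a dictionary. The function names `calling_app_id` and `is_call_allowed` and the `allowed_client_ids` parameter are hypothetical.

```python
from typing import Iterable, Mapping, Optional


def calling_app_id(claims: Mapping[str, object]) -> Optional[object]:
    """Return the calling client's ID: `azp` for v2.0 tokens, `appid` for v1.0 tokens."""
    if claims.get("ver") == "2.0":
        return claims.get("azp")
    return claims.get("appid")


def is_call_allowed(
    claims: Mapping[str, object],
    required_scope: str,
    allowed_client_ids: Optional[Iterable[str]] = None,
) -> bool:
    """Check `scp`, and optionally restrict callers by `appid`/`azp`.

    Assumes `claims` comes from a token that was already validated
    (signature, issuer, audience, lifetime).
    """
    # `scp` is a space-separated string of delegated scopes.
    granted_scopes = str(claims.get("scp", "")).split()
    if required_scope not in granted_scopes:
        return False

    # APIs open to any calling application skip the client check.
    if allowed_client_ids is None:
        return True

    return calling_app_id(claims) in set(allowed_client_ids)
```

Passing `allowed_client_ids=None` corresponds to an API that accepts any calling application. Passing a set of client IDs corresponds to an API restricted to pre-determined callers.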

| active-directory | Id Tokens | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/develop/id-tokens.md | The table below shows the claims that are in most ID tokens by default (except w |`roles`| Array of strings | The set of roles that were assigned to the user who is logging in. | |`rh` | Opaque String |An internal claim used by Azure to revalidate tokens. Should be ignored. | |`sub` | String | The principal about which the token asserts information, such as the user of an app. This value is immutable and cannot be reassigned or reused. The subject is a pairwise identifier - it is unique to a particular application ID. If a single user signs into two different apps using two different client IDs, those apps will receive two different values for the subject claim. This may or may not be wanted depending on your architecture and privacy requirements. |-|`tid` | String, a GUID | Represents the tenant that the user is signing in to. For work and school accounts, the GUID is the immutable tenant ID of the organization that the user is signing in to. For sign-ins to the personal Microsoft account tenant (services like Xbox, Teams for Life, or Outlook), the value is `9188040d-6c67-4c5b-b112-36a304b66dad`. To receive this claim, your app must request the `profile` scope. | +|`tid` | String, a GUID | Represents the tenant that the user is signing in to. For work and school accounts, the GUID is the immutable tenant ID of the organization that the user is signing in to. For sign-ins to the personal Microsoft account tenant (services like Xbox, Teams for Life, or Outlook), the value is `9188040d-6c67-4c5b-b112-36a304b66dad`.| | `unique_name` | String | Only present in v1.0 tokens. Provides a human readable value that identifies the subject of the token. This value is not guaranteed to be unique within a tenant and should be used only for display purposes. 
| | `uti` | String | Token identifier claim, equivalent to `jti` in the JWT specification. Unique, per-token identifier that is case-sensitive.| |`ver` | String, either 1.0 or 2.0 | Indicates the version of the id_token. | |

| active-directory | Howto Vm Sign In Azure Ad Linux | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/devices/howto-vm-sign-in-azure-ad-linux.md | There are two ways to configure role assignments for a VM: - Azure Cloud Shell experience > [!NOTE]-> The Virtual Machine Administrator Login and Virtual Machine User Login roles use `dataActions` and can be assigned at the management group, subscription, resource group, or resource scope. We recommend that you assign the roles at the management group, subscription, or resource level and not at the individual VM level. This practice avoids the risk of reaching the [Azure role assignments limit](../../role-based-access-control/troubleshooting.md#azure-role-assignments-limit) per subscription. +> The Virtual Machine Administrator Login and Virtual Machine User Login roles use `dataActions` and can be assigned at the management group, subscription, resource group, or resource scope. We recommend that you assign the roles at the management group, subscription, or resource level and not at the individual VM level. This practice avoids the risk of reaching the [Azure role assignments limit](../../role-based-access-control/troubleshooting.md#limits) per subscription. ### Azure AD portal If you get a message that says the token couldn't be retrieved from the local ca ### Access denied: Azure role not assigned -If you see an "Azure role not assigned" error on your SSH prompt, verify that you've configured Azure RBAC policies for the VM that grants the user either the Virtual Machine Administrator Login role or the Virtual Machine User Login role. If you're having problems with Azure role assignments, see the article [Troubleshoot Azure RBAC](../../role-based-access-control/troubleshooting.md#azure-role-assignments-limit). 
+If you see an "Azure role not assigned" error on your SSH prompt, verify that you've configured Azure RBAC policies for the VM that grants the user either the Virtual Machine Administrator Login role or the Virtual Machine User Login role. If you're having problems with Azure role assignments, see the article [Troubleshoot Azure RBAC](../../role-based-access-control/troubleshooting.md#limits). ### Problems deleting the old (AADLoginForLinux) extension |

| active-directory | Howto Vm Sign In Azure Ad Windows | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/devices/howto-vm-sign-in-azure-ad-windows.md | You might get the following error message when you initiate a remote desktop con Verify that you've [configured Azure RBAC policies](../../virtual-machines/linux/login-using-aad.md) for the VM that grant the user the Virtual Machine Administrator Login or Virtual Machine User Login role. > [!NOTE]-> If you're having problems with Azure role assignments, see [Troubleshoot Azure RBAC](../../role-based-access-control/troubleshooting.md#azure-role-assignments-limit). +> If you're having problems with Azure role assignments, see [Troubleshoot Azure RBAC](../../role-based-access-control/troubleshooting.md#limits). ### Unauthorized client or password change required |

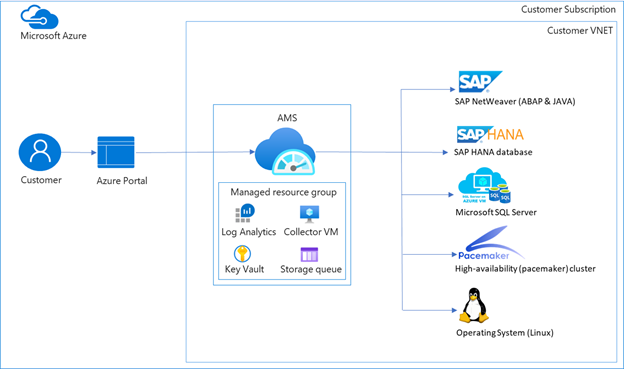

| active-directory | Scenario Azure First Sap Identity Integration | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/fundamentals/scenario-azure-first-sap-identity-integration.md | This document provides advice on the technical design and configuration of SAP p | [IPS](https://help.sap.com/viewer/f48e822d6d484fa5ade7dda78b64d9f5/Cloud/en-US/2d2685d469a54a56b886105a06ccdae6.html) | SAP Cloud Identity Services - Identity Provisioning Service. IPS helps to synchronize identities between different stores / target systems. | | [XSUAA](https://blogs.sap.com/2019/01/07/uaa-xsuaa-platform-uaa-cfuaa-what-is-it-all-about/) | Extended Services for Cloud Foundry User Account and Authentication. XSUAA is a multi-tenant OAuth authorization server within the SAP BTP. | | [CF](https://www.cloudfoundry.org/) | Cloud Foundry. Cloud Foundry is the environment on which SAP built their multi-cloud offering for BTP (AWS, Azure, GCP, Alibaba). |-| [Fiori](https://www.sap.com/products/fiori/develop.html) | The web-based user experience of SAP (as opposed to the desktop-based experience). | +| [Fiori](https://www.sap.com/products/fiori.html) | The web-based user experience of SAP (as opposed to the desktop-based experience). | ## Overview Azure AD B2C doesn't natively support the use of groups to create collections of Fortunately, Azure AD B2C is highly customizable, so you can configure the SAML tokens it sends to IAS to include any custom information. For various options on supporting authorization claims, see the documentation accompanying the [Azure AD B2C App Roles sample](https://github.com/azure-ad-b2c/api-connector-samples/tree/main/Authorization-AppRoles), but in summary: through its [API Connector](../../active-directory-b2c/api-connectors-overview.md) extensibility mechanism you can optionally still use groups, app roles, or even a custom database to determine what the user is allowed to access. 
-Regardless of where the authorization information comes from, it can then be emitted as the `Groups` attribute inside the SAML token by configuring that attribute name as the [default partner claim type on the claims schema](../../active-directory-b2c/claimsschema.md#defaultpartnerclaimtypes) or by overriding the [partner claim type on the output claims](../../active-directory-b2c/relyingparty.md#outputclaims). Note however that BTP allows you to [map Role Collections to User Attributes](https://help.sap.com/products/BTP/65de2977205c403bbc107264b8eccf4b/b3fbb1a9232d4cf99967a0b29dd85d4c.html), which means that *any* attribute name can be used for authorization decisions, even if you don't use the `Groups` attribute name. +Regardless of where the authorization information comes from, it can then be emitted as the `Groups` attribute inside the SAML token by configuring that attribute name as the [default partner claim type on the claims schema](../../active-directory-b2c/claimsschema.md#defaultpartnerclaimtypes) or by overriding the [partner claim type on the output claims](../../active-directory-b2c/relyingparty.md#outputclaims). Note however that BTP allows you to [map Role Collections to User Attributes](https://help.sap.com/products/BTP/65de2977205c403bbc107264b8eccf4b/b3fbb1a9232d4cf99967a0b29dd85d4c.html), which means that *any* attribute name can be used for authorization decisions, even if you don't use the `Groups` attribute name. |

| active-directory | Create Service Principal Cross Tenant | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/manage-apps/create-service-principal-cross-tenant.md | + + Title: 'Create an enterprise application from a multi-tenant application' +description: Create an enterprise application using the client ID for a multi-tenant application. +++++++ Last updated : 07/26/2022++++zone_pivot_groups: enterprise-apps-cli +++#Customer intent: As an administrator of an Azure AD tenant, I want to create an enterprise application using client ID for a multi-tenant application provided by a service provider or independent software vendor. +++# Create an enterprise application from a multi-tenant application in Azure Active Directory ++In this article, you'll learn how to create an enterprise application in your tenant using the client ID for a multi-tenant application. An enterprise application refers to a service principal within a tenant. The service principal discussed in this article is the local representation, or application instance, of a global application object in a single tenant or directory. ++Before you proceed to add the application using any of these options, check whether the enterprise application is already in your tenant by attempting to sign in to the application. If the sign-in is successful, the enterprise application already exists in your tenant. ++If you have verified that the application isn't in your tenant, proceed with any of the following ways to add the enterprise application to your tenant using the appId ++## Prerequisites ++To add an enterprise application to your Azure AD tenant, you need: ++- An Azure AD user account. If you don't already have one, you can [Create an account for free](https://azure.microsoft.com/free/?WT.mc_id=A261C142F). +- One of the following roles: Global Administrator, Cloud Application Administrator, or Application Administrator. +- The client ID of the multi-tenant application. 
+++## Create an enterprise application +++If you've been provided with the admin consent URL, navigate to the URL through a web browser to [grant tenant-wide admin consent](grant-admin-consent.md) to the application. Granting tenant-wide admin consent to the application will add it to your tenant. The tenant-wide admin consent URL has the following format: ++```http +https://login.microsoftonline.com/common/oauth2/authorize?response_type=code&client_id={client-id}&redirect_uri=https://www.your-app-url.com +``` +where: ++- `{client-id}` is the application's client ID (also known as appId). ++++1. Run `Connect-MgGraph -Scopes "Application.ReadWrite.All"` and sign in with a Global Administrator user account. +1. Run the following command to create the enterprise application: ++ ```powershell + New-MgServicePrincipal -AppId fc876dd1-6bcb-4304-b9b6-18ddf1526b62 + ``` +1. To delete the enterprise application you created, run the command: ++ ```powershell + Remove-MgServicePrincipal + -ServicePrincipalId <objectID> + ``` ++From the Microsoft Graph explorer window: ++1. To create the enterprise application, insert the following query: + + ```http + POST /servicePrincipals + ``` +1. Supply the following request in the **Request body**. ++ { + "appId": "fc876dd1-6bcb-4304-b9b6-18ddf1526b62" + } +1. Grant the Application.ReadWrite.All permission under the **Modify permissions** tab and select **Run query**. ++1. To delete the enterprise application you created, run the query: ++ ```http + DELETE /servicePrincipals/{objectID} + ``` +1. To create the enterprise application, run the following command: + + ```azurecli + az ad sp create --id fc876dd1-6bcb-4304-b9b6-18ddf1526b62 + ``` ++1. 
To delete the enterprise application you created, run the command: ++ ```azurecli + az ad sp delete --id + ``` +++## Next steps ++- [Add RBAC role to the enterprise application](/azure/role-based-access-control/role-assignments-portal) +- [Assign users to your application](add-application-portal-assign-users.md) |

| active-directory | What Is Application Management | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/manage-apps/what-is-application-management.md | To [manage access](what-is-access-management.md) for an application, you want to You can [manage user consent settings](configure-user-consent.md) to choose whether users can allow an application or service to access user profiles and organizational data. When applications are granted access, users can sign in to applications integrated with Azure AD, and the application can access your organization's data to deliver rich data-driven experiences. -Users often are unable to consent to the permissions an application is requesting. Configure the [admin consent workflow](configure-admin-consent-workflow.md) to allow users to provide a justification and request an administrator's review and approval of an application. +Users often are unable to consent to the permissions an application is requesting. Configure the admin consent workflow to allow users to provide a justification and request an administrator's review and approval of an application. For training on how to configure admin consent workflow in your Azure AD tenant, see [Configure admin consent workflow](/learn/modules/configure-admin-consent-workflow). As an administrator, you can [grant tenant-wide admin consent](grant-admin-consent.md) to an application. Tenant-wide admin consent is necessary when an application requires permissions that regular users aren't allowed to grant, and allows organizations to implement their own review processes. Always carefully review the permissions the application is requesting before granting consent. When an application has been granted tenant-wide admin consent, all users are able to sign into the application unless it has been configured to require user assignment. |

| active-directory | Managed Identity Best Practice Recommendations | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/managed-identities-azure-resources/managed-identity-best-practice-recommendations.md | You'll need to manually delete a user-assigned identity when it's no longer requ Role assignments aren't automatically deleted when either system-assigned or user-assigned managed identities are deleted. These role assignments should be manually deleted so the limit of role assignments per subscription isn't exceeded. Role assignments that are associated with deleted managed identities-will be displayed with “Identity not found” when viewed in the portal. [Read more](../../role-based-access-control/troubleshooting.md#role-assignments-with-identity-not-found). +will be displayed with “Identity not found” when viewed in the portal. [Read more](../../role-based-access-control/troubleshooting.md#symptomrole-assignments-with-identity-not-found). :::image type="content" source="media/managed-identity-best-practice-recommendations/identity-not-found.png" alt-text="Identity not found for role assignment."::: |

| active-directory | Azure Pim Resource Rbac | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/azure-pim-resource-rbac.md | Title: View audit report for Azure resource roles in Privileged Identity Managem description: View activity and audit history for Azure resource roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' |

| active-directory | Concept Privileged Access Versus Role Assignable | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/concept-privileged-access-versus-role-assignable.md | Title: What's the difference between Privileged Access groups and role-assignabl description: Learn how to tell the difference between Privileged Access groups and role-assignable groups in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Groups Activate Roles | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/groups-activate-roles.md | Title: Activate privileged access group roles in PIM - Azure AD | Microsoft Docs description: Learn how to activate your privileged access group roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 02/24/2022-+ |

| active-directory | Groups Approval Workflow | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/groups-approval-workflow.md | Title: Approve activation requests for group members and owners in Privileged Id description: Learn how to approve or deny requests for role-assignable groups in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Groups Assign Member Owner | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/groups-assign-member-owner.md | Title: Assign eligible owners and members for privileged access groups - Azure A description: Learn how to assign eligible owners or members of a role-assignable group in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Groups Audit | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/groups-audit.md | Title: View audit report for privileged access group assignments in Privileged I description: View activity and audit history for privileged access group assignments in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' |

| active-directory | Groups Discover Groups | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/groups-discover-groups.md | Title: Identify a group to manage in Privileged Identity Management - Azure AD | description: Learn how to onboard role-assignable groups to manage as privileged access groups in Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Groups Features | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/groups-features.md | Title: Managing Privileged Access groups in Privileged Identity Management (PIM) description: How to manage members and owners of privileged access groups in Privileged Identity Management (PIM) documentationcenter: ''-+ ms.assetid: |

| active-directory | Groups Renew Extend | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/groups-renew-extend.md | Title: Renew expired group owner or member assignments in Privileged Identity Ma description: Learn how to extend or renew role-assignable group assignments in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Groups Role Settings | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/groups-role-settings.md | Title: Configure privileged access groups settings in PIM - Azure Active Directo description: Learn how to configure role-assignable groups settings in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Pim Apis | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-apis.md | Title: API concepts in Privileged Identity management - Azure AD | Microsoft Doc description: Information for understanding the APIs in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' The only link between the PIM entity and the role assignment entity for persiste ## Next steps -- [Azure AD Privileged Identity Management API reference](/graph/api/resources/privilegedidentitymanagementv3-overview)+- [Azure AD Privileged Identity Management API reference](/graph/api/resources/privilegedidentitymanagementv3-overview) |

| active-directory | Pim Complete Azure Ad Roles And Resource Roles Review | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-complete-azure-ad-roles-and-resource-roles-review.md | Title: Complete an access review of Azure resource and Azure AD roles in PIM - A description: Learn how to complete an access review of Azure resource and Azure AD roles Privileged Identity Management in Azure Active Directory. documentationcenter: ''-+ editor: '' na Last updated 10/07/2021-+ |

| active-directory | Pim Configure | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-configure.md | Title: What is Privileged Identity Management? - Azure AD | Microsoft Docs description: Provides an overview of Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' |

| active-directory | Pim Create Azure Ad Roles And Resource Roles Review | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-create-azure-ad-roles-and-resource-roles-review.md | Title: Create an access review of Azure resource and Azure AD roles in PIM - Azu description: Learn how to create an access review of Azure resource and Azure AD roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' |

| active-directory | Pim Deployment Plan | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-deployment-plan.md | Title: Plan a Privileged Identity Management deployment - Azure AD | Microsoft D description: Learn how to deploy Privileged Identity Management (PIM) in your Azure AD organization. documentationcenter: ''-+ editor: '' |

| active-directory | Pim Email Notifications | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-email-notifications.md | Title: Email notifications in Privileged Identity Management (PIM) - Azure Activ description: Describes email notifications in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 10/07/2021-+ |

| active-directory | Pim Getting Started | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-getting-started.md | Title: Start using PIM - Azure Active Directory | Microsoft Docs description: Learn how to enable and get started using Azure AD Privileged Identity Management (PIM) in the Azure portal. documentationcenter: ''-+ editor: '' |

| active-directory | Pim How To Activate Role | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-how-to-activate-role.md | Title: Activate Azure AD roles in PIM - Azure Active Directory | Microsoft Docs description: Learn how to activate Azure AD roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' |

| active-directory | Pim How To Add Role To User | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-how-to-add-role-to-user.md | Title: Assign Azure AD roles in PIM - Azure Active Directory | Microsoft Docs description: Learn how to assign Azure AD roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' |

| active-directory | Pim How To Change Default Settings | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-how-to-change-default-settings.md | Title: Configure Azure AD role settings in PIM - Azure AD | Microsoft Docs description: Learn how to configure Azure AD role settings in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' |

| active-directory | Pim How To Configure Security Alerts | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-how-to-configure-security-alerts.md | Title: Security alerts for Azure AD roles in PIM - Azure AD | Microsoft Docs description: Configure security alerts for Azure AD roles Privileged Identity Management in Azure Active Directory. documentationcenter: ''-+ editor: '' Customize settings on the different alerts to work with your environment and sec ## Next steps -- [Configure Azure AD role settings in Privileged Identity Management](pim-how-to-change-default-settings.md)+- [Configure Azure AD role settings in Privileged Identity Management](pim-how-to-change-default-settings.md) |

| active-directory | Pim How To Renew Extend | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-how-to-renew-extend.md | Title: Renew Azure AD role assignments in PIM - Azure Active Directory | Microso description: Learn how to extend or renew Azure Active Directory role assignments in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' na Last updated 06/24/2022-+ |

| active-directory | Pim How To Require Mfa | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-how-to-require-mfa.md | Title: MFA or 2FA and Privileged Identity Management - Azure AD | Microsoft Docs description: Learn how Azure AD Privileged Identity Management (PIM) validates multifactor authentication (MFA). documentationcenter: ''-+ editor: '' |

| active-directory | Pim How To Use Audit Log | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-how-to-use-audit-log.md | Title: View audit log report for Azure AD roles in Azure AD PIM | Microsoft Docs description: Learn how to view the audit log history for Azure AD roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' |

| active-directory | Pim Perform Azure Ad Roles And Resource Roles Review | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-perform-azure-ad-roles-and-resource-roles-review.md | Title: Perform an access review of Azure resource and Azure AD roles in PIM - Az description: Learn how to review access of Azure resource and Azure AD roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' na Last updated 10/07/2021-+ |

| active-directory | Pim Resource Roles Activate Your Roles | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-resource-roles-activate-your-roles.md | Title: Activate Azure resource roles in PIM - Azure AD | Microsoft Docs description: Learn how to activate your Azure resource roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ -Use Privileged Identity Management (PIM) in Azure Active Diretory (Azure AD), part of Microsoft Entra, to allow eligible role members for Azure resources to schedule activation for a future date and time. They can also select a specific activation duration within the maximum (configured by administrators). +Use Privileged Identity Management (PIM) in Azure Active Directory (Azure AD), part of Microsoft Entra, to allow eligible role members for Azure resources to schedule activation for a future date and time. They can also select a specific activation duration within the maximum (configured by administrators). This article is for members who need to activate their Azure resource role in Privileged Identity Management. |

| active-directory | Pim Resource Roles Approval Workflow | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-resource-roles-approval-workflow.md | Title: Approve requests for Azure resource roles in PIM - Azure AD | Microsoft D description: Learn how to approve or deny requests for Azure resource roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Pim Resource Roles Assign Roles | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-resource-roles-assign-roles.md | Title: Assign Azure resource roles in Privileged Identity Management - Azure Act description: Learn how to assign Azure resource roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Pim Resource Roles Configure Alerts | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-resource-roles-configure-alerts.md | Title: Configure security alerts for Azure roles in Privileged Identity Manageme description: Learn how to configure security alerts for Azure resource roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Pim Resource Roles Configure Role Settings | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-resource-roles-configure-role-settings.md | Title: Configure Azure resource role settings in PIM - Azure AD | Microsoft Docs description: Learn how to configure Azure resource role settings in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/24/2022-+ |

| active-directory | Pim Resource Roles Custom Role Policy | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-resource-roles-custom-role-policy.md | Title: Use Azure custom roles in PIM - Azure AD | Microsoft Docs description: Learn how to use Azure custom roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/27/2022-+ Finally, [assign roles](pim-resource-roles-assign-roles.md) to the distinct grou ## Next steps - [Configure Azure resource role settings in Privileged Identity Management](pim-resource-roles-configure-role-settings.md)-- [Custom roles in Azure](../../role-based-access-control/custom-roles.md)+- [Custom roles in Azure](../../role-based-access-control/custom-roles.md) |

| active-directory | Pim Resource Roles Discover Resources | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-resource-roles-discover-resources.md | Title: Discover Azure resources to manage in PIM - Azure AD | Microsoft Docs description: Learn how to discover Azure resources to manage in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ na Last updated 06/27/2022-+ |

| active-directory | Pim Resource Roles Overview Dashboards | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-resource-roles-overview-dashboards.md | Title: Resource dashboards for access reviews in PIM - Azure AD | Microsoft Docs description: Describes how to use a resource dashboard to perform an access review in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: markwahl-msft na Last updated 06/27/2022-+ Below the charts are listed the number of users and groups with new role assignm ## Next steps -- [Start an access review for Azure resource roles in Privileged Identity Management](./pim-create-azure-ad-roles-and-resource-roles-review.md)+- [Start an access review for Azure resource roles in Privileged Identity Management](./pim-create-azure-ad-roles-and-resource-roles-review.md) |

| active-directory | Pim Resource Roles Renew Extend | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-resource-roles-renew-extend.md | Title: Renew Azure resource role assignments in PIM - Azure AD | Microsoft Docs description: Learn how to extend or renew Azure resource role assignments in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' na Last updated 10/19/2021-+ |

| active-directory | Pim Roles | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-roles.md | Title: Roles you cannot manage in Privileged Identity Management - Azure Active description: Describes the roles you cannot manage in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' We support all Microsoft 365 roles in the Azure AD Roles and Administrators port ## Next steps - [Assign Azure AD roles in Privileged Identity Management](pim-how-to-add-role-to-user.md)-- [Assign Azure resource roles in Privileged Identity Management](pim-resource-roles-assign-roles.md)+- [Assign Azure resource roles in Privileged Identity Management](pim-resource-roles-assign-roles.md) |

| active-directory | Pim Security Wizard | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-security-wizard.md | Title: Azure AD roles Discovery and insights (preview) in Privileged Identity Ma description: Discovery and insights (formerly Security Wizard) help you convert permanent Azure AD role assignments to just-in-time assignments with Privileged Identity Management. documentationcenter: ''-+ editor: '' |

| active-directory | Pim Troubleshoot | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/pim-troubleshoot.md | Title: Troubleshoot resource access denied in Privileged Identity Management - A description: Learn how to troubleshoot system errors with roles in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' Assign the User Access Administrator role to the Privileged identity Management - [License requirements to use Privileged Identity Management](subscription-requirements.md) - [Securing privileged access for hybrid and cloud deployments in Azure AD](../roles/security-planning.md?toc=%2fazure%2factive-directory%2fprivileged-identity-management%2ftoc.json)-- [Deploy Privileged Identity Management](pim-deployment-plan.md)+- [Deploy Privileged Identity Management](pim-deployment-plan.md) |

| active-directory | Powershell For Azure Ad Roles | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/powershell-for-azure-ad-roles.md | Title: PowerShell for Azure AD roles in PIM - Azure AD | Microsoft Docs description: Manage Azure AD roles using PowerShell cmdlets in Azure AD Privileged Identity Management (PIM). documentationcenter: ''-+ editor: '' |

| active-directory | Subscription Requirements | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/privileged-identity-management/subscription-requirements.md | If an Azure AD Premium P2, EMS E5, or trial license expires, Privileged Identity - [Start using Privileged Identity Management](pim-getting-started.md) - [Roles you can't manage in Privileged Identity Management](pim-roles.md) - [Create an access review of Azure resource roles in PIM](./pim-create-azure-ad-roles-and-resource-roles-review.md)-- [Create an access review of Azure AD roles in PIM](./pim-create-azure-ad-roles-and-resource-roles-review.md)+- [Create an access review of Azure AD roles in PIM](./pim-create-azure-ad-roles-and-resource-roles-review.md) |

| active-directory | 8X8 Provisioning Tutorial | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/saas-apps/8x8-provisioning-tutorial.md | This tutorial describes the steps you need to perform in both 8x8 Admin Console ## Capabilities supported > [!div class="checklist"] > * Create users in 8x8-> * Remove users in 8x8 when they do not require access anymore +> * Deactivate users in 8x8 when they do not require access anymore > * Keep user attributes synchronized between Azure AD and 8x8 > * [Single sign-on](./8x8virtualoffice-tutorial.md) to 8x8 (recommended) This section guides you through the steps to configure 8x8 to support provisioni ### To configure a user provisioning access token in 8x8 Admin Console: -1. Sign in to [Admin Console](https://admin.8x8.com). Select **Identity Management**. +1. Sign in to [Admin Console](https://admin.8x8.com). Select **Identity and Security**. -  + [  ](./media/8x8-provisioning-tutorial/8x8-identity-and-security.png#lightbox) -2. Click the **Show user provisioning information** link to generate a token. +2. In the **User Provisioning Integration (SCIM)** pane, click the toggle to enable and then click **Save**. -  + [  ](./media/8x8-provisioning-tutorial/8x8-enable-user-provisioning.png#lightbox) 3. Copy the **8x8 URL** and **8x8 API Token** values. These values will be entered in the **Tenant URL** and **Secret Token** fields respectively in the Provisioning tab of your 8x8 application in the Azure portal. -  + [  ](./media/8x8-provisioning-tutorial/8x8-copy-url-token.png#lightbox) ## Step 3. Add 8x8 from the Azure AD application gallery This section guides you through the steps to configure the Azure AD provisioning 1. Sign in to the [Azure portal](https://portal.azure.com). Select **Enterprise Applications**, then select **All applications**. -  +  -  +  2. In the applications list, select **8x8**. -  +  3. Select the **Provisioning** tab. Click on **Get started**.  -  +  4. 
Set the **Provisioning Mode** to **Automatic**. This section guides you through the steps to configure the Azure AD provisioning 6. In the **Notification Email** field, enter the email address of a person or group who should receive the provisioning error notifications and select the **Send an email notification when a failure occurs** check box. -  +  7. Select **Save**. Once you've configured provisioning, use the following resources to monitor your ## Next steps -* [Learn how to review logs and get reports on provisioning activity](../app-provisioning/check-status-user-account-provisioning.md) +* [Learn how to review logs and get reports on provisioning activity](../app-provisioning/check-status-user-account-provisioning.md) |

| active-directory | Articulate360 Tutorial | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/saas-apps/articulate360-tutorial.md | Follow these steps to enable Azure AD SSO in the Azure portal.  -1. In addition to above, Articulate 360 application expects few more attributes to be passed back in SAML response, which are shown below. These attributes are also pre populated but you can review them as per your requirements. +1. Articulate 360 application expects the default attributes to be replaced with the specific attributes as shown below. These attributes are also pre populated but you can review them as per your requirements. | Name | Source Attribute| | | | |

| active-directory | Aws Clientvpn Tutorial | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/saas-apps/aws-clientvpn-tutorial.md | Follow these steps to enable Azure AD SSO in the Azure portal. 1. Click on **Manifest** and you need to keep the Reply URL as **http** instead of **https** to get the integration working, click on **Save**. -  +  1. AWS ClientVPN application expects the SAML assertions in a specific format, which requires you to add custom attribute mappings to your SAML token attributes configuration. The following screenshot shows the list of default attributes. Follow these steps to enable Azure AD SSO in the Azure portal. | Name | Source Attribute| | -- | | | memberOf | user.groups |+ | FirstName | user.givenname | + | LastName | user.surname | 1. On the **Set up single sign-on with SAML** page, in the **SAML Signing Certificate** section, find **Federation Metadata XML** and select **Download** to download the certificate and save it on your computer.  +1. In the **SAML Signing Certificate** section, click the edit icon and change the **Signing Option** to **Sign SAML response and assertion**. Click **Save**. ++  + 1. On the **Set up AWS ClientVPN** section, copy the appropriate URL(s) based on your requirement.  |

| active-directory | Cheetah For Benelux Tutorial | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/saas-apps/cheetah-for-benelux-tutorial.md | + + Title: 'Tutorial: Azure AD SSO integration with Cheetah For Benelux' +description: Learn how to configure single sign-on between Azure Active Directory and Cheetah For Benelux. ++++++++ Last updated : 07/21/2022+++++# Tutorial: Azure AD SSO integration with Cheetah For Benelux ++In this tutorial, you'll learn how to integrate Cheetah For Benelux with Azure Active Directory (Azure AD). When you integrate Cheetah For Benelux with Azure AD, you can: ++* Control in Azure AD who has access to Cheetah For Benelux. +* Enable your users to be automatically signed-in to Cheetah For Benelux with their Azure AD accounts. +* Manage your accounts in one central location - the Azure portal. ++## Prerequisites ++To get started, you need the following items: ++* An Azure AD subscription. If you don't have a subscription, you can get a [free account](https://azure.microsoft.com/free/). +* Cheetah For Benelux single sign-on (SSO) enabled subscription. +* Along with Cloud Application Administrator, Application Administrator can also add or manage applications in Azure AD. +For more information, see [Azure built-in roles](../roles/permissions-reference.md). ++## Scenario description ++In this tutorial, you configure and test Azure AD SSO in a test environment. ++* Cheetah For Benelux supports **SP** initiated SSO. +* Cheetah For Benelux supports **Just In Time** user provisioning. ++> [!NOTE] +> Identifier of this application is a fixed string value so only one instance can be configured in one tenant. ++## Add Cheetah For Benelux from the gallery ++To configure the integration of Cheetah For Benelux into Azure AD, you need to add Cheetah For Benelux from the gallery to your list of managed SaaS apps. ++1. Sign in to the Azure portal using either a work or school account, or a personal Microsoft account. 
+1. On the left navigation pane, select the **Azure Active Directory** service. +1. Navigate to **Enterprise Applications** and then select **All Applications**. +1. To add new application, select **New application**. +1. In the **Add from the gallery** section, type **Cheetah For Benelux** in the search box. +1. Select **Cheetah For Benelux** from results panel and then add the app. Wait a few seconds while the app is added to your tenant. ++## Configure and test Azure AD SSO for Cheetah For Benelux ++Configure and test Azure AD SSO with Cheetah For Benelux using a test user called **B.Simon**. For SSO to work, you need to establish a link relationship between an Azure AD user and the related user in Cheetah For Benelux. ++To configure and test Azure AD SSO with Cheetah For Benelux, perform the following steps: ++1. **[Configure Azure AD SSO](#configure-azure-ad-sso)** - to enable your users to use this feature. + 1. **[Create an Azure AD test user](#create-an-azure-ad-test-user)** - to test Azure AD single sign-on with B.Simon. + 1. **[Assign the Azure AD test user](#assign-the-azure-ad-test-user)** - to enable B.Simon to use Azure AD single sign-on. +1. **[Configure Cheetah For Benelux SSO](#configure-cheetah-for-benelux-sso)** - to configure the single sign-on settings on application side. + 1. **[Create Cheetah For Benelux test user](#create-cheetah-for-benelux-test-user)** - to have a counterpart of B.Simon in Cheetah For Benelux that is linked to the Azure AD representation of user. +1. **[Test SSO](#test-sso)** - to verify whether the configuration works. ++## Configure Azure AD SSO ++Follow these steps to enable Azure AD SSO in the Azure portal. ++1. In the Azure portal, on the **Cheetah For Benelux** application integration page, find the **Manage** section and select **single sign-on**. +1. On the **Select a single sign-on method** page, select **SAML**. +1. 
On the **Set up single sign-on with SAML** page, click the pencil icon for **Basic SAML Configuration** to edit the settings. ++  ++1. On the **Basic SAML Configuration** section, perform the following steps: ++ a. In the **Reply URL** textbox, type the URL: + `https://ups.eu.sso.cheetah.com/saml2/idpresponse` ++ b. In the **Sign-on URL** text box, type the URL: + `https://ups.eu.sso.cheetah.com/login?client_id=5c2m16mhv4cd4o5cpgekmsmlne&response_type=token&scope=aws.cognito.signin.user.admin+openid+profile&redirect_uri=https://prodeditor.eu.cheetah.com/CssWebTask/landing/?cheetah_client=BNLX` ++1. On the **Set-up single sign-on with SAML** page, in the **SAML Signing Certificate** section, find **Federation Metadata XML** and select **Download** to download the certificate and save it on your computer. ++  ++1. On the **Set up Cheetah For Benelux** section, copy the appropriate URL(s) based on your requirement. ++  ++### Create an Azure AD test user ++In this section, you'll create a test user in the Azure portal called B.Simon. ++1. From the left pane in the Azure portal, select **Azure Active Directory**, select **Users**, and then select **All users**. +1. Select **New user** at the top of the screen. +1. In the **User** properties, follow these steps: + 1. In the **Name** field, enter `B.Simon`. + 1. In the **User name** field, enter the username@companydomain.extension. For example, `B.Simon@contoso.com`. + 1. Select the **Show password** check box, and then write down the value that's displayed in the **Password** box. + 1. Click **Create**. ++### Assign the Azure AD test user ++In this section, you'll enable B.Simon to use Azure single sign-on by granting access to Cheetah For Benelux. ++1. In the Azure portal, select **Enterprise Applications**, and then select **All applications**. +1. In the applications list, select **Cheetah For Benelux**. +1. In the app's overview page, find the **Manage** section and select **Users and groups**. +1. 
Select **Add user**, then select **Users and groups** in the **Add Assignment** dialog. +1. In the **Users and groups** dialog, select **B.Simon** from the Users list, then click the **Select** button at the bottom of the screen. +1. If you are expecting a role to be assigned to the users, you can select it from the **Select a role** dropdown. If no role has been set up for this app, you see "Default Access" role selected. +1. In the **Add Assignment** dialog, click the **Assign** button. ++## Configure Cheetah For Benelux SSO ++To configure single sign-on on **Cheetah For Benelux** side, you need to send the downloaded **Federation Metadata XML** and appropriate copied URLs from Azure portal to [Cheetah For Benelux support team](mailto:support@cheetah.com). They set this setting to have the SAML SSO connection set properly on both sides. ++### Create Cheetah For Benelux test user ++In this section, a user called B.Simon is created in Cheetah For Benelux. Cheetah For Benelux supports just-in-time user provisioning, which is enabled by default. There is no action item for you in this section. If a user doesn't already exist in Cheetah For Benelux, a new one is created after authentication. ++## Test SSO ++In this section, you test your Azure AD single sign-on configuration with following options. ++* Click on **Test this application** in Azure portal. This will redirect to Cheetah For Benelux Sign-on URL where you can initiate the login flow. ++* Go to Cheetah For Benelux Sign-on URL directly and initiate the login flow from there. ++* You can use Microsoft My Apps. When you click the Cheetah For Benelux tile in the My Apps, this will redirect to Cheetah For Benelux Sign-on URL. For more information about the My Apps, see [Introduction to the My Apps](../user-help/my-apps-portal-end-user-access.md). 
++## Next steps ++Once you configure Cheetah For Benelux you can enforce session control, which protects exfiltration and infiltration of your organization’s sensitive data in real time. Session control extends from Conditional Access. [Learn how to enforce session control with Microsoft Cloud App Security](/cloud-app-security/proxy-deployment-aad). |

| active-directory | Expensify Tutorial | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/saas-apps/expensify-tutorial.md | In this section, you'll enable B.Simon to use Azure single sign-on by granting a ## Configure Expensify SSO -To enable SSO in Expensify, you first need to enable **Domain Control** in the application. You can enable Domain Control in the application through the steps listed [here](https://help.expensify.com/domain-control). For additional support, work with [Expensify Client support team](mailto:help@expensify.com). Once you have Domain Control enabled, follow these steps: +To enable SSO in Expensify, you first need to enable **Domain Control** in the application. For additional support, work with [Expensify Client support team](mailto:help@expensify.com). Once you have Domain Control enabled, follow these steps:  |

| active-directory | Lusid Tutorial | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/saas-apps/lusid-tutorial.md | + + Title: 'Tutorial: Azure AD SSO integration with LUSID' +description: Learn how to configure single sign-on between Azure Active Directory and LUSID. ++++++++ Last updated : 07/21/2022+++++# Tutorial: Azure AD SSO integration with LUSID ++In this tutorial, you'll learn how to integrate LUSID with Azure Active Directory (Azure AD). When you integrate LUSID with Azure AD, you can: ++* Control in Azure AD who has access to LUSID. +* Enable your users to be automatically signed-in to LUSID with their Azure AD accounts. +* Manage your accounts in one central location - the Azure portal. ++## Prerequisites ++To get started, you need the following items: ++* An Azure AD subscription. If you don't have a subscription, you can get a [free account](https://azure.microsoft.com/free/). +* LUSID single sign-on (SSO) enabled subscription. +* Along with Cloud Application Administrator, Application Administrator can also add or manage applications in Azure AD. +For more information, see [Azure built-in roles](../roles/permissions-reference.md). ++## Scenario description ++In this tutorial, you configure and test Azure AD SSO in a test environment. ++* LUSID supports **SP** and **IDP** initiated SSO. +* LUSID supports **Just In Time** user provisioning. ++## Add LUSID from the gallery ++To configure the integration of LUSID into Azure AD, you need to add LUSID from the gallery to your list of managed SaaS apps. ++1. Sign in to the Azure portal using either a work or school account, or a personal Microsoft account. +1. On the left navigation pane, select the **Azure Active Directory** service. +1. Navigate to **Enterprise Applications** and then select **All Applications**. +1. To add new application, select **New application**. +1. In the **Add from the gallery** section, type **LUSID** in the search box. +1. 
Select **LUSID** from results panel and then add the app. Wait a few seconds while the app is added to your tenant. ++## Configure and test Azure AD SSO for LUSID ++Configure and test Azure AD SSO with LUSID using a test user called **B.Simon**. For SSO to work, you need to establish a link relationship between an Azure AD user and the related user at LUSID. ++To configure and test Azure AD SSO with LUSID, perform the following steps: ++1. **[Configure Azure AD SSO](#configure-azure-ad-sso)** - to enable your users to use this feature. + 1. **[Create an Azure AD test user](#create-an-azure-ad-test-user)** - to test Azure AD single sign-on with B.Simon. + 1. **[Assign the Azure AD test user](#assign-the-azure-ad-test-user)** - to enable B.Simon to use Azure AD single sign-on. +1. **[Configure LUSID SSO](#configure-lusid-sso)** - to configure the single sign-on settings on application side. + 1. **[Create LUSID test user](#create-lusid-test-user)** - to have a counterpart of B.Simon in LUSID that is linked to the Azure AD representation of user. +1. **[Test SSO](#test-sso)** - to verify whether the configuration works. ++## Configure Azure AD SSO ++Follow these steps to enable Azure AD SSO in the Azure portal. ++1. In the Azure portal, on the **LUSID** application integration page, find the **Manage** section and select **single sign-on**. +1. On the **Select a single sign-on method** page, select **SAML**. +1. On the **Set up single sign-on with SAML** page, click the pencil icon for **Basic SAML Configuration** to edit the settings. ++  ++1. On the **Basic SAML Configuration** section, perform the following steps: ++ a. In the **Identifier** textbox, type a URL using the following pattern: + `https://www.okta.com/saml2/service-provider/<ID>` ++ b. In the **Reply URL** textbox, type a URL using the following pattern: + `https://<CustomerDomain>.identity.lusid.com/sso/saml2/<ID>` ++1. 
Click **Set additional URLs** and perform the following steps, if you wish to configure the application in **SP** initiated mode: ++ a. In the **Sign-on URL** text box, type a URL using the following pattern: + `https://<CustomerDomain>.lusid.com/ ` ++ b. In the **Relay State** text box, type a URL using the following pattern: + `https://<CustomerDomain>.lusid.com/app/home` ++ > [!Note] + > These values are not real. Update these values with the actual Identifier, Reply URL, Sign on URL and Relay State URL. Contact [LUSID support team](mailto:support@finbourne.com) to get these values. You can also refer to the patterns shown in the **Basic SAML Configuration** section in the Azure portal. ++1. LUSID application expects the SAML assertions in a specific format, which requires you to add custom attribute mappings to your SAML token attributes configuration. The following screenshot shows the list of default attributes. ++  ++1. In addition to above, LUSID application expects few more attributes to be passed back in SAML response, which are shown below. These attributes are also pre populated but you can review them as per your requirements. ++ | Name | Source Attribute| + | | | + | email | user.mail | + | firstName | user.givenname | + | lastName | user.surname | + | fbn-groups | user.assignedroles | ++ > [!NOTE] + > Please click [here](../develop/howto-add-app-roles-in-azure-ad-apps.md#app-roles-ui) to know how to configure Role in Azure AD. ++1. On the **Set up single sign-on with SAML** page, in the **SAML Signing Certificate** section, find **Certificate (Base64)** and select **Download** to download the certificate and save it on your computer. ++  ++1. On the **Set up LUSID** section, copy the appropriate URL(s) based on your requirement. ++  ++### Create an Azure AD test user ++In this section, you'll create a test user in the Azure portal called B.Simon. ++1. 
From the left pane in the Azure portal, select **Azure Active Directory**, select **Users**, and then select **All users**. +1. Select **New user** at the top of the screen. +1. In the **User** properties, follow these steps: + 1. In the **Name** field, enter `B.Simon`. + 1. In the **User name** field, enter the username@companydomain.extension. For example, `B.Simon@contoso.com`. + 1. Select the **Show password** check box, and then write down the value that's displayed in the **Password** box. + 1. Click **Create**. ++### Assign the Azure AD test user ++In this section, you'll enable B.Simon to use Azure single sign-on by granting access to LUSID. ++1. In the Azure portal, select **Enterprise Applications**, and then select **All applications**. +1. In the applications list, select **LUSID**. +1. In the app's overview page, find the **Manage** section and select **Users and groups**. +1. Select **Add user**, then select **Users and groups** in the **Add Assignment** dialog. +1. In the **Users and groups** dialog, select **B.Simon** from the Users list, then click the **Select** button at the bottom of the screen. +1. If you are expecting a role to be assigned to the users, you can select it from the **Select a role** dropdown. If no role has been set up for this app, you see "Default Access" role selected. +1. In the **Add Assignment** dialog, click the **Assign** button. ++## Configure LUSID SSO ++To configure single sign-on on **LUSID** side, you need to send the downloaded **Certificate (Base64)** and appropriate copied URLs from Azure portal to [LUSID support team](mailto:support@finbourne.com). They set this setting to have the SAML SSO connection set properly on both sides. ++### Create LUSID test user ++In this section, a user called B.Simon is created in LUSID. LUSID supports just-in-time user provisioning, which is enabled by default. There is no action item for you in this section. 
If a user doesn't already exist in LUSID, a new one is created after authentication. ++## Test SSO ++In this section, you test your Azure AD single sign-on configuration with the following options. ++#### SP initiated: ++* Click on **Test this application** in the Azure portal. This redirects you to the LUSID Sign-on URL, where you can initiate the login flow. ++* Go to the LUSID Sign-on URL directly and initiate the login flow from there. ++#### IDP initiated: ++* Click on **Test this application** in the Azure portal and you should be automatically signed in to the LUSID application for which you set up SSO. ++You can also use Microsoft My Apps to test the application in any mode. When you click the LUSID tile in My Apps, you're redirected to the application sign-on page to initiate the login flow if the app is configured in SP mode; if it's configured in IDP mode, you should be automatically signed in to the LUSID application for which you set up SSO. For more information about My Apps, see [Introduction to the My Apps](../user-help/my-apps-portal-end-user-access.md). ++## Next steps ++Once you configure LUSID, you can enforce session control, which protects against exfiltration and infiltration of your organization's sensitive data in real time. Session control extends from Conditional Access. [Learn how to enforce session control with Microsoft Cloud App Security](/cloud-app-security/proxy-deployment-aad). |

| active-directory | Lytx Drivecam Tutorial | https://github.com/MicrosoftDocs/azure-docs/commits/main/articles/active-directory/saas-apps/lytx-drivecam-tutorial.md | + + Title: 'Tutorial: Azure AD SSO integration with Lytx DriveCam' +description: Learn how to configure single sign-on between Azure Active Directory and Lytx DriveCam. ++++++++ Last updated : 07/23/2022+++++# Tutorial: Azure AD SSO integration with Lytx DriveCam ++In this tutorial, you'll learn how to integrate Lytx DriveCam with Azure Active Directory (Azure AD). When you integrate Lytx DriveCam with Azure AD, you can: ++* Control in Azure AD who has access to Lytx DriveCam. +* Enable your users to be automatically signed in to Lytx DriveCam with their Azure AD accounts. +* Manage your accounts in one central location - the Azure portal. ++## Prerequisites ++To get started, you need the following items: ++* An Azure AD subscription. If you don't have a subscription, you can get a [free account](https://azure.microsoft.com/free/). +* A Lytx DriveCam single sign-on (SSO) enabled subscription. +* Along with Cloud Application Administrator, Application Administrator can also add or manage applications in Azure AD. +For more information, see [Azure built-in roles](../roles/permissions-reference.md). ++## Scenario description ++In this tutorial, you configure and test Azure AD SSO in a test environment. ++* Lytx DriveCam supports **IDP** initiated SSO. ++## Add Lytx DriveCam from the gallery ++To configure the integration of Lytx DriveCam into Azure AD, you need to add Lytx DriveCam from the gallery to your list of managed SaaS apps. ++1. Sign in to the Azure portal using either a work or school account, or a personal Microsoft account. +1. On the left navigation pane, select the **Azure Active Directory** service. +1. Navigate to **Enterprise Applications** and then select **All Applications**. +1. To add a new application, select **New application**. +1. 
In the **Add from the gallery** section, type **Lytx DriveCam** in the search box. +1. Select **Lytx DriveCam** from the results panel and then add the app. Wait a few seconds while the app is added to your tenant. ++## Configure and test Azure AD SSO for Lytx DriveCam ++Configure and test Azure AD SSO with Lytx DriveCam using a test user called **B.Simon**. For SSO to work, you need to establish a link relationship between an Azure AD user and the related user at Lytx DriveCam. ++To configure and test Azure AD SSO with Lytx DriveCam, perform the following steps: ++1. **[Configure Azure AD SSO](#configure-azure-ad-sso)** - to enable your users to use this feature. + 1. **[Create an Azure AD test user](#create-an-azure-ad-test-user)** - to test Azure AD single sign-on with B.Simon. + 1. **[Assign the Azure AD test user](#assign-the-azure-ad-test-user)** - to enable B.Simon to use Azure AD single sign-on. +1. **[Configure Lytx DriveCam SSO](#configure-lytx-drivecam-sso)** - to configure the single sign-on settings on the application side. + 1. **[Create Lytx DriveCam test user](#create-lytx-drivecam-test-user)** - to have a counterpart of B.Simon in Lytx DriveCam that is linked to the Azure AD representation of the user. +1. **[Test SSO](#test-sso)** - to verify whether the configuration works. ++## Configure Azure AD SSO ++Follow these steps to enable Azure AD SSO in the Azure portal. ++1. In the Azure portal, on the **Lytx DriveCam** application integration page, find the **Manage** section and select **single sign-on**. +1. On the **Select a single sign-on method** page, select **SAML**. +1. On the **Set up single sign-on with SAML** page, click the pencil icon for **Basic SAML Configuration** to edit the settings. ++  ++1. In the **Basic SAML Configuration** section, the application is preconfigured and the necessary URLs are already prepopulated by Azure. Save the configuration by clicking the **Save** button. ++1. 
On the **Set up single sign-on with SAML** page, in the **SAML Signing Certificate** section, find **Certificate (Base64)** and select **Download** to download the certificate and save it on your computer. ++  ++1. In the **Set up Lytx DriveCam** section, copy the appropriate URL(s) based on your requirements. ++  ++### Create an Azure AD test user ++In this section, you'll create a test user in the Azure portal called B.Simon. ++1. From the left pane in the Azure portal, select **Azure Active Directory**, select **Users**, and then select **All users**. +1. Select **New user** at the top of the screen. +1. In the **User** properties, follow these steps: + 1. In the **Name** field, enter `B.Simon`. + 1. In the **User name** field, enter the user name in the form username@companydomain.extension. For example, `B.Simon@contoso.com`. + 1. Select the **Show password** check box, and then write down the value that's displayed in the **Password** box. + 1. Click **Create**. ++### Assign the Azure AD test user ++In this section, you'll enable B.Simon to use Azure single sign-on by granting access to Lytx DriveCam. ++1. In the Azure portal, select **Enterprise Applications**, and then select **All applications**. +1. In the applications list, select **Lytx DriveCam**. +1. In the app's overview page, find the **Manage** section and select **Users and groups**. +1. Select **Add user**, then select **Users and groups** in the **Add Assignment** dialog. +1. In the **Users and groups** dialog, select **B.Simon** from the Users list, then click the **Select** button at the bottom of the screen. +1. If you are expecting a role to be assigned to the users, you can select it from the **Select a role** dropdown. If no role has been set up for this app, you see the "Default Access" role selected. +1. In the **Add Assignment** dialog, click the **Assign** button. 
++## Configure Lytx DriveCam SSO ++To configure single sign-on on the **Lytx DriveCam** side, you need to send the downloaded **Certificate (Base64)** and the appropriate copied URLs from the Azure portal to the [Lytx DriveCam support team](mailto:support@lytx.com). They configure this setting so that the SAML SSO connection is set properly on both sides. ++### Create Lytx DriveCam test user ++In this section, you create a user called B.Simon at Lytx DriveCam. Work with the [Lytx DriveCam support team](mailto:support@lytx.com) to add the users in the Lytx DriveCam platform. Users must be created and activated before you use single sign-on. ++## Test SSO ++In this section, you test your Azure AD single sign-on configuration with the following options. ++* Click on **Test this application** in the Azure portal and you should be automatically signed in to the Lytx DriveCam application for which you set up SSO. ++* You can use Microsoft My Apps. When you click the Lytx DriveCam tile in My Apps, you should be automatically signed in to the Lytx DriveCam application for which you set up SSO. For more information about My Apps, see [Introduction to the My Apps](../user-help/my-apps-portal-end-user-access.md). ++## Next steps ++Once you configure Lytx DriveCam, you can enforce session control, which protects against exfiltration and infiltration of your organization's sensitive data in real time. Session control extends from Conditional Access. [Learn how to enforce session control with Microsoft Cloud App Security](/cloud-app-security/proxy-deployment-aad). |